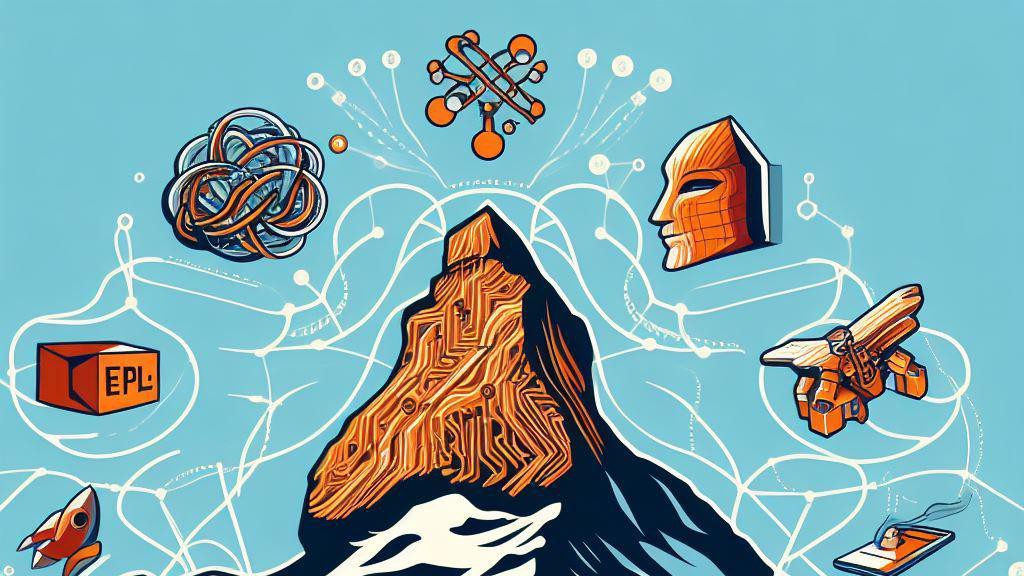

Tensor networks, tensor cumulants, and tensor factorization

Event details

| Date | 15.05.2024 |

| Hour | 10:15 › 11:15 |

| Speaker | Cris Moore |

| Location | |

| Category | Conferences - Seminars |

| Event Language | English |

Statistical physics and statistical inference have a deep connection, and ideas continue to flow between the two fields. Consider the following problem in high-dimensional statistics: there is a hidden vector v, and we observe v’s p-th outer product with itself, giving a tensor of arity p, with Gaussian noise in every entry. Given this observed tensor, our goal is to reconstruct v, as well as to reject the null hypothesis that the tensor consists only of noise. To a physicist, this is a planted p-spin model, where p is the arity of the tensor, and where the coupling matrix is correlated with the hidden vector. To a computer scientist, it is a tensor version of PCA (principal component analysis). But we lack a theory of linear algebra for tensors, so we can’t simply look at the dominant eigenvector and hope it is correlated with v. What should we do instead? What is the “spectrum” of a tensor anyway?

Many algorithms approach problems like this by “flattening” the tensor into a matrix, and then looking at the spectrum of the resulting matrix. But a more natural approach is to form a tensor network with copies of the observed tensor, and contract it to obtain a scalar or vector. I’ll describe how this picture helps us understand a conjectured phase transition where this problem becomes exponentially hard, and provides algorithms that work all the way up to that transition.

This is joint work with Tim Kunisky (Yale) and Alex Wein (UC Davis).

Practical information

- Informed public

- Free

Contact

- lenaic.chizat@epfl.ch